Leveraging AI Power: A Comprehensive Guide to Integrating OpenAI with NestJS

Introduction

Artificial intelligence (AI) is advancing quickly, and OpenAI is one of its most prominent providers. OpenAI’s models can generate human-like text, answer questions, and write code, which makes the OpenAI API a practical way for developers to add AI features to their applications.

NestJS is a progressive Node.js framework for building efficient, scalable, and well-structured server-side applications. It is built with TypeScript and supports modern JavaScript features, which makes it a popular choice for backend development.

When you combine OpenAI with NestJS, you get the capabilities of OpenAI’s models together with the structure and scalability of a NestJS application. In this guide, the OpenAI client lives in an injectable service, a JWT-protected controller exposes it as an API endpoint, and both layers are covered by unit tests.

Next, let’s explore the step-by-step process of setting up and securing your NestJS project with OpenAI integration.

Prerequisites

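Before we dive in, make sure you have the following:

- Node.js
- npm or yarn
- NestJS CLI
- An OpenAI API key
- JWT authentication already set up in your project (the controller in Step 4 uses an existing JwtAuthGuard, which this guide does not build)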

Step 1: Setting Up the NestJS Project

First, create a new NestJS project using the NestJS CLI.

```bash
nest new <your-app-name>
cd <your-app-name>
```

Step 2: Installing Required Packages

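We’ll need the OpenAI SDK, plus @nestjs/swagger for the API documentation decorators used in the controller and DTO, and @nestjs/config for loading the .env file.

```bash
npm install openai @nestjs/swagger @nestjs/config
```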

Step 3: Setting Up Environment Variables

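Create a .env file in the root of your project to store the OpenAI API key, and make sure .env is listed in .gitignore so the key is never committed.

```
OPENAI_API_KEY=<your-openai-key>
```

NestJS does not read .env files on its own. One way to load it is to add ConfigModule.forRoot() from @nestjs/config to the imports of your root AppModule, which copies the file’s values into process.env.

Step 4: Setting Up the OpenAI Service and Controller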

1. Create a service to handle OpenAI API requests.

In this service, we use the GPT-3.5-turbo model to process user queries. The service sends user prompts to the OpenAI API and returns the generated response from the assistant.

```typescript
// src/open-ai/open-ai.service.ts
import { Injectable } from '@nestjs/common';
import OpenAI from 'openai';
import { ChatCompletionMessage } from 'openai/resources/chat/completions';
import { OpenAIRequestDto } from './dtos/open-ai-req.dto';

@Injectable()
export class OpenAIService {
  // One client per service instance. The key is read from the environment,
  // so it must be set (e.g. loaded from .env via @nestjs/config) before startup.
  private openai = new OpenAI({
    apiKey: process.env.OPENAI_API_KEY,
  });

  // Sends the prompt as a single user message and returns the assistant's reply.
  async chatCompletion(
    request: OpenAIRequestDto,
  ): Promise<ChatCompletionMessage> {
    const completion = await this.openai.chat.completions.create({
      messages: [
        {
          role: 'user',
          content: request.prompt,
        },
      ],
      model: 'gpt-3.5-turbo',
    });

    return completion.choices[0].message;
  }
}
```
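
The service returns the whole message object, and the SDK types its content field as string | null (it can be null, for example, when the model answers with a tool call instead of text). If a caller only wants text, a small guard helps. Below is a minimal sketch of a hypothetical helper, extractReplyText; the CompletionLike type is an assumption that mirrors only the response fields the helper reads, so the sketch has no SDK dependency.

```typescript
// Minimal structural types (an assumption): only the fields this helper reads,
// mirroring a subset of the SDK's chat completion response.
interface ReplyMessage {
  role: string;
  content: string | null;
}

interface CompletionLike {
  choices: Array<{ message: ReplyMessage; finish_reason?: string | null }>;
}

// Hypothetical helper: returns the assistant's text from the first choice,
// or throws if the response contains no usable text.
export function extractReplyText(completion: CompletionLike): string {
  const first = completion.choices[0];
  if (!first) {
    throw new Error('OpenAI returned no choices');
  }

  const { content } = first.message;
  if (content === null || content.trim() === '') {
    // finish_reason hints at why, e.g. 'tool_calls' or 'content_filter'.
    throw new Error(
      `OpenAI returned no text (finish_reason: ${first.finish_reason ?? 'unknown'})`,
    );
  }
  return content;
}
```

To use it, chatCompletion could return extractReplyText(completion) with a Promise<string> return type; the controller signature and the tests would need the same change.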

2. Create a controller to handle requests to the OpenAI service.

Here, we create an API endpoint that takes a user query and returns a response generated by the OpenAI service. The endpoint is protected by JWT authentication, so only requests carrying a valid token can reach it.
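
The controller imports a JwtAuthGuard from your existing auth code, which this guide does not show. If your project uses @nestjs/passport (installed with passport), such a guard is often just a thin subclass of AuthGuard('jwt'), as sketched below. This assumes a Passport JWT strategy is already registered under the default name 'jwt'; the file path matches the controller’s import, but the contents are an assumption.

```typescript
// src/auth/guards/jwt-guard.ts (hypothetical contents; your project's guard may differ)
import { Injectable } from '@nestjs/common';
import { AuthGuard } from '@nestjs/passport';

// AuthGuard('jwt') runs the Passport strategy registered under the name 'jwt'.
// That strategy (not shown; e.g. one built on passport-jwt) reads the
// "Authorization: Bearer <token>" header, verifies the token, and attaches the
// resulting user to the request. Missing or invalid tokens yield 401 Unauthorized.
@Injectable()
export class JwtAuthGuard extends AuthGuard('jwt') {}
```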

```typescript
// src/open-ai/open-ai.controller.ts
import { Body, Controller, Post, UseGuards } from '@nestjs/common';
import { ApiBearerAuth, ApiTags } from '@nestjs/swagger';
import { ChatCompletionMessage } from 'openai/resources/chat/completions';
// Relative path: a bare 'src/...' import may need extra Jest configuration
// (e.g. moduleNameMapper) to resolve when running the unit tests.
import { JwtAuthGuard } from '../auth/guards/jwt-guard';
import { OpenAIService } from './open-ai.service';
import { OpenAIRequestDto } from './dtos/open-ai-req.dto';

@ApiTags('Open AI')
@ApiBearerAuth('AUTHORIZATION-JWT')
@UseGuards(JwtAuthGuard) // every route in this controller requires a valid JWT
@Controller('open-ai')
export class OpenAIController {
  constructor(private readonly service: OpenAIService) {}

  // POST /open-ai/generate with a JSON body such as { "prompt": "..." }
  @Post('generate')
  async generateResponse(
    @Body() request: OpenAIRequestDto,
  ): Promise<ChatCompletionMessage> {
    return this.service.chatCompletion(request);
  }
}
```
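
Errors thrown by the OpenAI SDK, such as a rate-limit response or an invalid API key, currently reach Nest’s default exception filter and come back to the client as a generic 500. A hypothetical helper, toHttpException, could translate them into more meaningful statuses. It relies on the SDK exposing its error classes on the default export (OpenAI.APIError, which carries the upstream HTTP status); passing 429 through and reporting other upstream failures as 502 is a design assumption, not something this guide prescribes.

```typescript
import { HttpException, HttpStatus } from '@nestjs/common';
import OpenAI from 'openai';

// Hypothetical helper: maps errors thrown by the OpenAI SDK to HTTP errors
// that make sense to API clients, instead of Nest's generic 500.
export function toHttpException(error: unknown): HttpException {
  // OpenAI.APIError is the SDK's base class for failed API calls; its `status`
  // holds the upstream HTTP status (undefined for connection failures).
  if (error instanceof OpenAI.APIError) {
    if (error.status === 429) {
      // Rate limit or exhausted quota: the client may retry later.
      return new HttpException(
        'OpenAI rate limit reached, please retry later',
        HttpStatus.TOO_MANY_REQUESTS,
      );
    }
    // Bad API key, upstream outage, or network failure: not the caller's fault.
    return new HttpException('OpenAI request failed', HttpStatus.BAD_GATEWAY);
  }
  return new HttpException(
    'Internal server error',
    HttpStatus.INTERNAL_SERVER_ERROR,
  );
}
```

In the controller, the service call would then be wrapped as try { return await this.service.chatCompletion(request); } catch (error) { throw toHttpException(error); }.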

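3. Create the DTO for the user request.

```typescript
// src/open-ai/dtos/open-ai-req.dto.ts
import { ApiProperty } from '@nestjs/swagger';

// Request body for POST /open-ai/generate.
export class OpenAIRequestDto {
  // The user's prompt, forwarded to the model as a single user message.
  @ApiProperty()
  prompt!: string;
}
```

4. Register the OpenAI service and controller in a feature module.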

```typescript
// src/open-ai/open-ai.module.ts
import { Module } from '@nestjs/common';
import { OpenAIController } from './open-ai.controller';
import { OpenAIService } from './open-ai.service';

@Module({
  controllers: [OpenAIController],
  providers: [OpenAIService],
})
export class OpenAIModule {}
```

Step 5: Testing the API with Postman
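
Before the endpoint can be tested, OpenAIModule has to be imported by the root module, and the .env file has to be loaded so that process.env.OPENAI_API_KEY is set when OpenAIService is created. A minimal sketch of src/app.module.ts, assuming @nestjs/config is installed; keep any controllers and providers your generated AppModule already declares.

```typescript
// src/app.module.ts
import { Module } from '@nestjs/common';
import { ConfigModule } from '@nestjs/config';
import { OpenAIModule } from './open-ai/open-ai.module';

@Module({
  imports: [
    // Loads .env into process.env; isGlobal makes ConfigService injectable
    // everywhere without importing ConfigModule again.
    ConfigModule.forRoot({ isGlobal: true }),
    // The feature module from Step 4 (controller + service).
    OpenAIModule,
    // Also import your existing auth module here if it is not already,
    // so the JWT strategy used by JwtAuthGuard is registered.
  ],
  // Keep any controllers/providers your generated AppModule already declares.
})
export class AppModule {}
```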

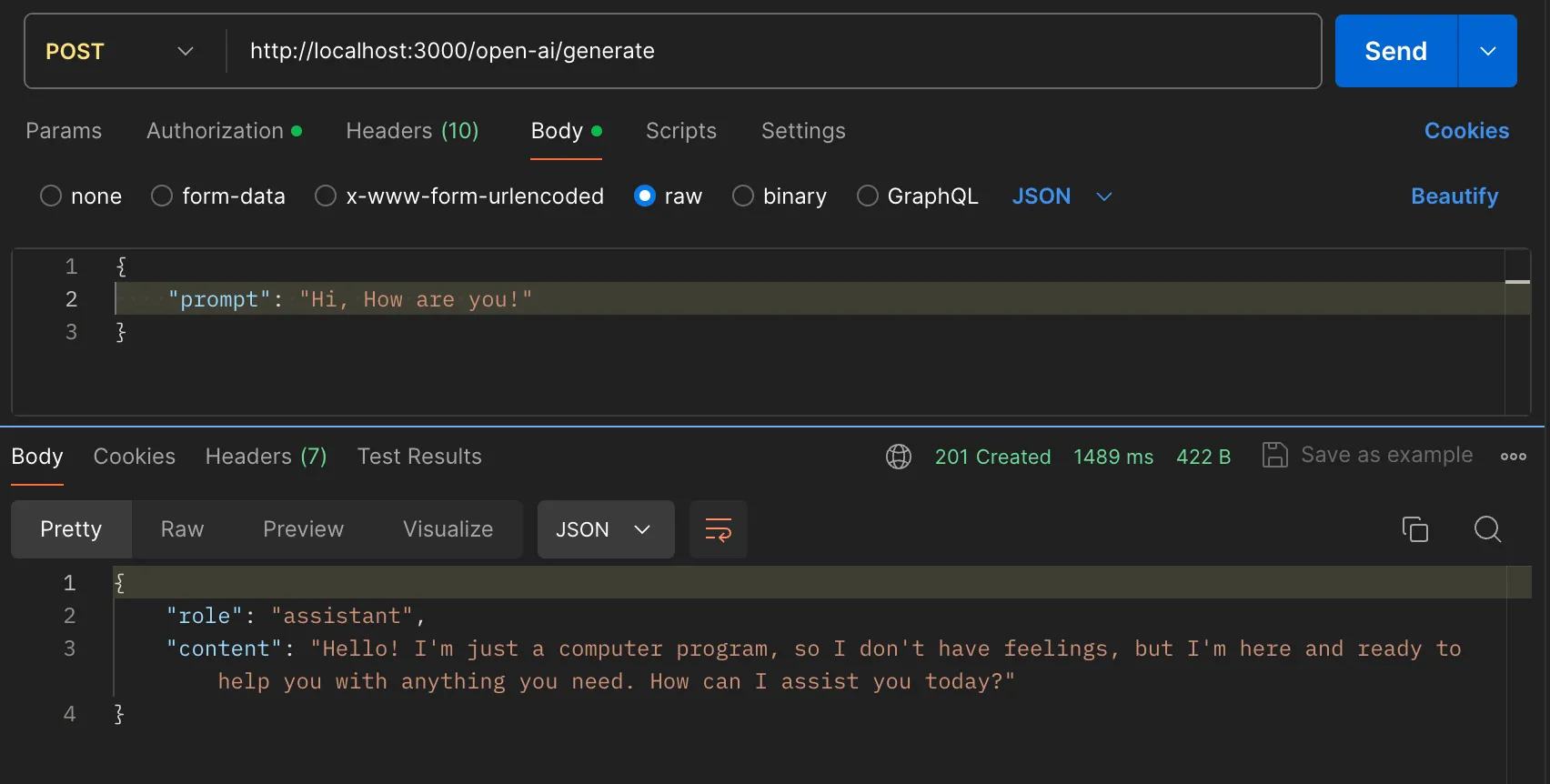

We’ll test our API using Postman to ensure everything is set up correctly

Postman Configuration:

- URL: http://localhost:3000/open-ai/generate

- Method: POST

- Headers:

- Authorization: Bearer <your-jwt-token>

- Content-Type: application/json

Below is a screenshot of the Postman setup for hitting the POST request to generate a response from OpenAI.
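
As written, the endpoint forwards whatever body it receives, so a request with an empty or missing prompt still reaches OpenAI. One common way to reject such requests with 400 Bad Request is to add class-validator decorators to the DTO and enable Nest’s ValidationPipe. The sketch below assumes npm install class-validator class-transformer and app.useGlobalPipes(new ValidationPipe()) in main.ts; the 4000-character limit and the promptErrors helper (included only to show which constraints fail) are hypothetical.

```typescript
// src/open-ai/dtos/open-ai-req.dto.ts, a validated variant (sketch)
import { ApiProperty } from '@nestjs/swagger';
import { IsNotEmpty, IsString, MaxLength, validate } from 'class-validator';

export class OpenAIRequestDto {
  @ApiProperty()
  @IsString()
  @IsNotEmpty()
  @MaxLength(4000) // hypothetical limit; tune it to your model and budget
  prompt!: string;
}

// Hypothetical helper for illustration: runs the same checks ValidationPipe
// would run and returns the names of the failed constraints.
export async function promptErrors(prompt: unknown): Promise<string[]> {
  const dto = Object.assign(new OpenAIRequestDto(), { prompt });
  const errors = await validate(dto);
  return errors.flatMap((error) => Object.keys(error.constraints ?? {}));
}
```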

Step 6: Implementing Unit Tests

We’ll write unit tests for the controller and the service. Each test replaces the layer below it with a Jest mock, so the tests run without network access or an OpenAI API key and check that the prompt is passed through and the reply is returned unchanged.

Testing the controller method

```typescript
// src/open-ai/open-ai.controller.spec.ts
import { Test, TestingModule } from '@nestjs/testing';
import { ChatCompletionMessage } from 'openai/resources/chat/completions';
import { OpenAIController } from './open-ai.controller';
import { OpenAIService } from './open-ai.service';
import { OpenAIRequestDto } from './dtos/open-ai-req.dto';

describe('OpenAIController', () => {
  let controller: OpenAIController;
  let service: OpenAIService;

  beforeEach(async () => {
    const module: TestingModule = await Test.createTestingModule({
      controllers: [OpenAIController],
      providers: [
        {
          // Stub the service so the controller test never calls OpenAI.
          provide: OpenAIService,
          useValue: {
            chatCompletion: jest.fn(),
          },
        },
      ],
    }).compile();

    controller = module.get<OpenAIController>(OpenAIController);
    service = module.get<OpenAIService>(OpenAIService);
  });

  it('should be defined', () => {
    expect(controller).toBeDefined();
  });

  describe('Method - generateResponse', () => {
    it('should call service function with the provided request', async () => {
      const request: OpenAIRequestDto = { prompt: 'Test prompt' };
      // Cast because newer SDK versions add required fields (e.g. refusal)
      // that this test does not care about.
      const expectedResponse = {
        role: 'assistant',
        content: 'Test response',
      } as ChatCompletionMessage;

      const spyOnChatCompletion = jest
        .spyOn(service, 'chatCompletion')
        .mockResolvedValue(expectedResponse);

      const result = await controller.generateResponse(request);

      expect(spyOnChatCompletion).toHaveBeenCalledWith(request);
      expect(result).toEqual(expectedResponse);
    });
  });
});
```

Testing the service method

```typescript
// src/open-ai/open-ai.service.spec.ts
import { Test, TestingModule } from '@nestjs/testing';
import OpenAI from 'openai';
import {
  ChatCompletion,
  ChatCompletionMessage,
} from 'openai/resources/chat/completions';
import { OpenAIService } from './open-ai.service';
import { OpenAIRequestDto } from './dtos/open-ai-req.dto';

// Auto-mock the SDK so creating the service never builds a real client
// (the real constructor throws when no API key is set).
jest.mock('openai');

describe('OpenAIService', () => {
  let service: OpenAIService;
  let mockOpenAI: jest.Mocked<OpenAI>;

  beforeEach(async () => {
    // Only the method the service calls needs to exist on the fake client.
    mockOpenAI = {
      chat: {
        completions: {
          create: jest.fn(),
        },
      },
    } as unknown as jest.Mocked<OpenAI>;

    const module: TestingModule = await Test.createTestingModule({
      providers: [OpenAIService],
    }).compile();

    service = module.get<OpenAIService>(OpenAIService);
    // The service creates its own client instead of injecting one,
    // so the fake is swapped in directly.
    service['openai'] = mockOpenAI;
  });

  it('should be defined', () => {
    expect(service).toBeDefined();
  });

  describe('Method - chatCompletion', () => {
    it('should call OpenAI API and return the response', async () => {
      const request: OpenAIRequestDto = { prompt: 'Test prompt' };
      // Only the fields the service reads; cast to the full type below.
      const mockResponse = {
        choices: [
          {
            message: {
              role: 'assistant',
              content: 'Test response',
            } as ChatCompletionMessage,
          },
        ],
      };

      const spyOnOpenAICreate = jest
        .spyOn(mockOpenAI.chat.completions, 'create')
        .mockResolvedValue(mockResponse as ChatCompletion);

      const result = await service.chatCompletion(request);

      expect(spyOnOpenAICreate).toHaveBeenCalledWith({
        messages: [{ role: 'user', content: request.prompt }],
        model: 'gpt-3.5-turbo',
      });
      expect(result).toEqual(mockResponse.choices[0].message);
    });
  });
});
```

What Next?

Once you’ve integrated OpenAI with NestJS, the next step is to bring your AI-powered features to life. This means creating a user-friendly interface using your favorite frontend framework. Users can then interact directly with your AI tools. For example, you could design a simple chat interface or a tool that generates text to show off OpenAI’s abilities.
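
As a starting point for such an interface, here is a framework-agnostic sketch of the client call. It sends the same request as the Postman setup in Step 5 (POST, Bearer token, JSON body with a prompt field) using the standard fetch API, available in modern browsers and Node.js 18+. The function and type names are hypothetical, and how the JWT is obtained is left to your own auth flow.

```typescript
// Shape of the reply from POST /open-ai/generate (the assistant message);
// only the fields a chat UI typically needs are listed here.
export interface GenerateReply {
  role: string;
  content: string | null;
}

// Plain request description, kept independent of DOM typings.
export interface GenerateRequest {
  url: string;
  init: { method: 'POST'; headers: Record<string, string>; body: string };
}

// Builds the same request as the Postman setup: POST, Bearer token, JSON body.
export function buildGenerateRequest(
  baseUrl: string,
  jwt: string,
  prompt: string,
): GenerateRequest {
  return {
    // A leading slash resolves against the origin, dropping any path in baseUrl.
    url: new URL('/open-ai/generate', baseUrl).toString(),
    init: {
      method: 'POST',
      headers: {
        Authorization: `Bearer ${jwt}`,
        'Content-Type': 'application/json',
      },
      body: JSON.stringify({ prompt }),
    },
  };
}

// Hypothetical client helper: sends the prompt and returns the assistant's text.
export async function askAssistant(
  baseUrl: string,
  jwt: string,
  prompt: string,
): Promise<string> {
  const { url, init } = buildGenerateRequest(baseUrl, jwt, prompt);
  const response = await fetch(url, init);
  if (!response.ok) {
    // A 401 usually means a missing or expired JWT.
    throw new Error(`Request failed with status ${response.status}`);
  }
  const reply = (await response.json()) as GenerateReply;
  return reply.content ?? '';
}
```

A chat component could, for example, call await askAssistant('http://localhost:3000', token, userInput) and append the returned text to the conversation.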

Using OpenAI with NestJS opens up many possibilities, letting you add advanced AI features in a structured and manageable way. Keep the API behind authentication and covered by tests so users can rely on your AI services.