Enhancing Your Web Apps with AI Integration Using Next.js and OpenAI

Leveraging the power of AI to make your web apps more attractive and powerful.

Artificial Intelligence (AI) is revolutionizing various industries, offering unprecedented opportunities for innovation. You can seamlessly incorporate AI into your web applications by harnessing the power of advanced Large Language Models (LLMs) through APIs. In this article, I’ll show you how to supercharge your Next.js app with the capabilities of OpenAI. We’ll explore creating dynamic chat completions using the OpenAI API and managing streaming responses on the front end, bringing your app to the next level of interactivity and intelligence.

Introduction to OpenAI APIs:

You’ve likely heard of ChatGPT, a chat assistant created by OpenAI that responds to user prompts by generating a sequence of tokens. OpenAI also offers APIs that you can integrate into your applications to receive responses from the different LLMs it makes available.

Getting Started

To begin, let’s create a new Next.js project:

npx create-next-app@latest ai-nextjs-integration

For demonstration purposes, we will create a basic app that generates a short note on a topic provided by the user.

Open the project directory in any IDE like VS Code. I am using VS Code for this example. Clean the boilerplate provided by Next.js and create custom components to build a basic UI.

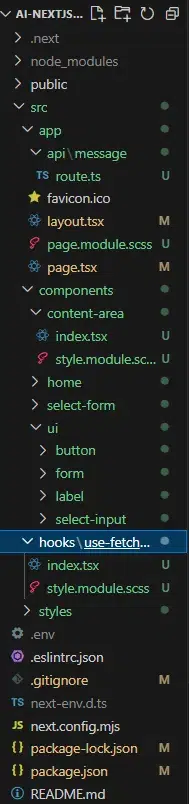

Project Structure

I am using Next.js 14 (App router) in this project and my project folder structure looks like this:

Folder Structure

Creating UI Elements

Let’s first create basic UI elements, and then we will go for AI integration by creating Next.js API routes.

I have created a components directory inside the src/ directory, and in it I have created UI components such as form, button, and select-input, imported from shadcn/ui, as you can see in the folder structure. I have also created a Home component where we will set up some state and structure the page.

Home Component

//src/components/home/index.tsx

'use client';

import styles from "./style.module.scss";

import SelectForm from "../select-form";

import ContentArea from "../content-area";

import { useState } from "react";

import { Button } from "../ui/button";

const Home = () => {

//state to set response from AI

const[aiResponse, setAiResponse] = useState("");

return (

<>

<h1 className={styles.title}>Generate AI Powered Short Notes, In Just One Click</h1>

<div className={styles.container}>

<h3 className={styles.subtitle}>Choose a Topic: </h3>

<SelectForm setAiResponse={setAiResponse}/>

<ContentArea aiResponse={aiResponse}/>

<Button onClick={()=>setAiResponse("")} disabled={!aiResponse} className={styles.reset_button}>Reset </Button>

</div>

</>

)

}

export default Home

The two main components, SelectForm and ContentArea, each have their own task. SelectForm handles the submission of the topic and sends it to the backend endpoint that we will create to set up the OpenAI connection.

SelectForm Component

//src/components/select-form/index.tsx

'use client';

import { Button } from '@/components/ui/button';

import { Form, FormControl, FormField, FormItem, FormLabel, FormMessage } from '@/components/ui/form';

import { Select, SelectContent, SelectItem, SelectTrigger, SelectValue } from '@/components/ui/select-input';

import { zodResolver } from '@hookform/resolvers/zod';

import { useForm } from 'react-hook-form';

import { z } from 'zod';

import styles from './style.module.scss';

import { Dispatch, FC, SetStateAction } from 'react';

const FormSchema = z.object({

topic: z

.string({

required_error: 'Please select a topic.',

})

});

interface Props {

setAiResponse: Dispatch<SetStateAction<string>>;

}

const SelectForm: FC<Props> = ({ setAiResponse }) => {

const form = useForm<z.infer<typeof FormSchema>>({

resolver: zodResolver(FormSchema),

});

const onSubmit = async (data: z.infer<typeof FormSchema>) => {

//nothing to do here for now, will implement this later.

};

return (

<Form {...form}>

<form onSubmit={form.handleSubmit(onSubmit)} className={styles.form}>

<FormField

control={form.control}

name='topic'

render={({ field }) => (

<FormItem>

<FormLabel>{form.getValues().topic ? `topic selected: ${form.getValues().topic}` : "Select Topic"}</FormLabel>

<Select onValueChange={field.onChange} defaultValue={field.value}>

<FormControl>

<SelectTrigger>

<SelectValue placeholder='Select a topic to generate short notes' />

</SelectTrigger>

</FormControl>

<SelectContent>

<SelectItem value='Artificial Intelligence'>Artificial Intelligence</SelectItem>

<SelectItem value='Javascript'>Javascript</SelectItem>

<SelectItem value='Machine Learning'>Machine Learning</SelectItem>

</SelectContent>

</Select>

<FormMessage />

</FormItem>

)}

/>

<Button disabled={!form.getValues().topic} type='submit'> Generate Short Note!</Button>

</form>

</Form>

);

};

export default SelectForm;

In this component, we have created a form that lets the user pick a topic from a list of available topics and handles the submission. I have used zod and react-hook-form to handle form validation and submission. For now, we have kept the onSubmit function empty and will implement it later.
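The schema above accepts any string as the topic, while the select list only offers three values. As a minimal sketch of tightening that check in plain TypeScript (the names TOPICS, Topic, and isTopic are hypothetical and not part of the original project), the allowed topics could be kept in one list and checked with a type guard:

```typescript
// Hypothetical single source of truth for the topics offered in the select list.
const TOPICS = ["Artificial Intelligence", "Javascript", "Machine Learning"] as const;

// Union type of the three allowed topic strings.
type Topic = (typeof TOPICS)[number];

// Type guard: true only when the value is one of the listed topics.
function isTopic(value: unknown): value is Topic {
  return typeof value === "string" && TOPICS.some((topic) => topic === value);
}

// Example: narrow an untyped value before using it as a Topic.
const candidate: unknown = "Javascript";
if (isTopic(candidate)) {
  console.log(`Selected topic: ${candidate}`);
}
```

The same list could also drive the SelectItem elements, so the options and the check cannot drift apart. With zod, z.enum would express the same constraint inside the schema itself.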

ContentArea Component

The other component, ContentArea, is responsible for displaying the AI-generated content on the screen.

The response that we get from the AI is in Markdown format, so to render it properly we will use a library. A number of libraries do this, but I have used react-markdown along with remark-gfm.

Let’s install both of these:

npm i react-markdown remark-gfm

//src/components/content-area/index.tsx

import React, { FC } from 'react'

import styles from './style.module.scss';

import Markdown from 'react-markdown'

import remarkGfm from 'remark-gfm'

interface Props {

aiResponse: string;

}

const ContentArea:FC<Props> = ({aiResponse}) => {

return (

<div className={styles.content_root}>

{ aiResponse ?

(<Markdown remarkPlugins={[remarkGfm]}>{aiResponse}</Markdown>)

:

(<div className={styles.no_response}>Response from Ai Will Appear Here!</div>)}

</div>

)

}

export default ContentArea

Frontend Layout

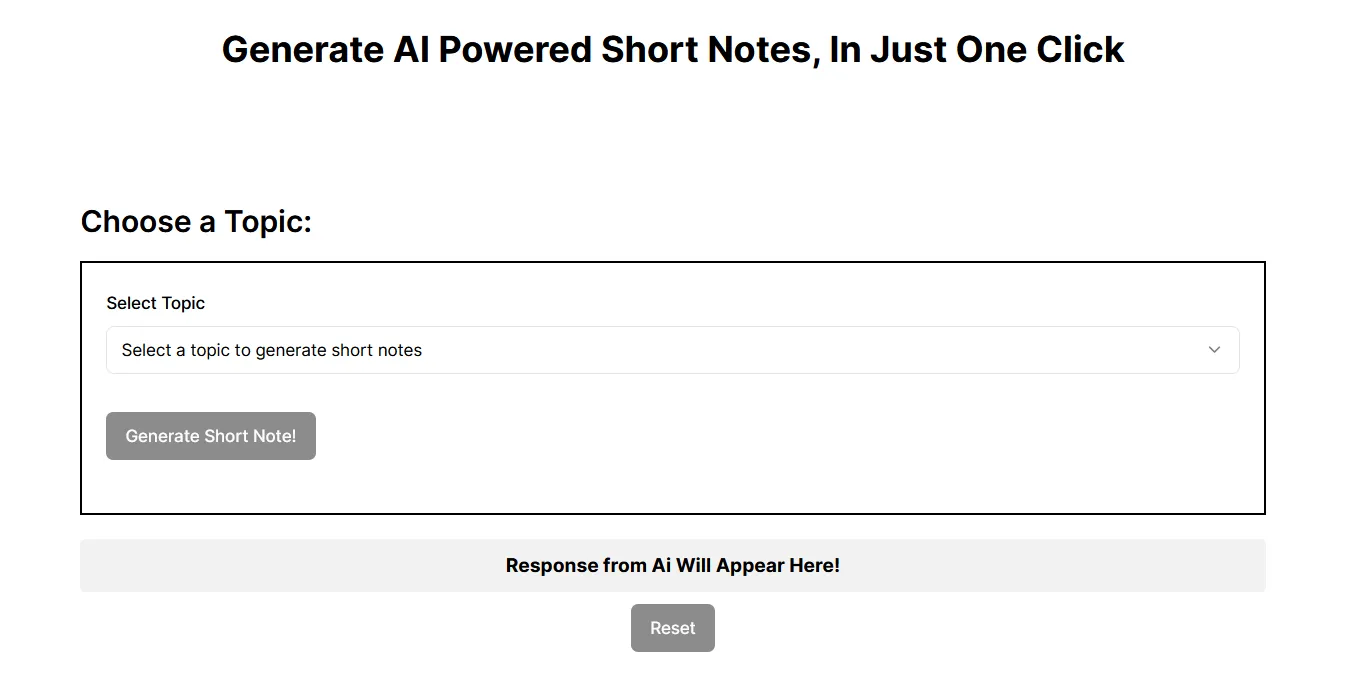

Now we are done with designing the basic layout. It looks like this :

Creating Next.js API route to set up OpenAI connection

Now, let’s create a route named ‘message’ at src/app/api/message/route.ts. Here, we will write server-side logic that connects to the OpenAI API, generates a response based on the prompt provided by the user, and sends it back as a streaming response.

Streaming the response is optional: you can simply send the final output to the user. But if you want the user to see the output as the AI model produces it, you can stream the response, and the text will appear as if a typewriter were writing it. This effect is also seen in ChatGPT and other AI assistants such as Claude.

Next.js offers an easy way to generate streaming responses, as described in its documentation.
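To make the idea concrete, here is a minimal sketch of a streaming body built only from standard Web APIs (ReadableStream, TextEncoder, TextDecoder), without any AI library. The generator fakeTokens is a hypothetical stand-in for a model producing text piece by piece, and the helper names are assumptions made for illustration:

```typescript
// Wrap an async iterator of strings in a ReadableStream of UTF-8 bytes.
function iteratorToStream(iterator: AsyncIterator<string>): ReadableStream<Uint8Array> {
  const encoder = new TextEncoder();
  return new ReadableStream<Uint8Array>({
    // pull() runs whenever the consumer is ready for the next chunk.
    async pull(controller) {
      const { value, done } = await iterator.next();
      if (done) {
        controller.close();
      } else {
        controller.enqueue(encoder.encode(value));
      }
    },
  });
}

// Hypothetical stand-in for a model that emits text one piece at a time.
async function* fakeTokens(): AsyncGenerator<string> {
  for (const piece of ["Streaming ", "one ", "chunk ", "at ", "a ", "time."]) {
    yield piece;
  }
}

// Read a byte stream to the end and return the decoded text.
async function readAll(stream: ReadableStream<Uint8Array>): Promise<string> {
  const reader = stream.getReader();
  const decoder = new TextDecoder();
  let text = "";
  while (true) {
    const { value, done } = await reader.read();
    if (done) break;
    // stream: true keeps partial multi-byte characters until the next chunk.
    text += decoder.decode(value, { stream: true });
  }
  // Flush any bytes still held by the decoder.
  return text + decoder.decode();
}
```

In a route handler, a stream like this can be returned to the browser with new Response(iteratorToStream(fakeTokens())), and the client can consume it chunk by chunk in the same way readAll does.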

To set up the OpenAI API and generate a streaming response, you need to install the following libraries:

npm i openai ai

‘message’ Route Handler

//src/app/api/message/route.ts

//import the necessary libraries.

import OpenAI from "openai";

import { OpenAIStream, StreamingTextResponse } from 'ai'

const openai = new OpenAI();

// In newer versions of the openai package, you don't need to pass the API key manually;

// you just need to set the API key as the value of the environment variable

// OPENAI_API_KEY in your project.

export const runtime = 'edge'

export async function POST(req: Request) {

const { topic } = await req.json();

//here, we are manually generating a prompt based on the topic selected by the user,

// but the prompt could also be provided entirely by the user.

const prompt = `generate a short note between 150 to 200 words on topic: ${topic}`;

const response = await openai.chat.completions.create({

model: 'gpt-3.5-turbo', //define the model of your choice from available openai models

stream: true, // flag this true to get streamed response

messages:[{role:"user", content:prompt}],

max_tokens: 300, // cap the number of tokens to limit billing costs; 200 words is roughly 270 tokens, so 150 would cut the note short

})

const stream = OpenAIStream(response)

return new StreamingTextResponse(stream)

}

So, we have set up the route handler, which generates the streaming response and returns it to the front end, where we can handle it.
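The handler reads topic straight from the request body and pairs a word-count prompt with a token limit, so two small helpers may be useful here. This is a sketch under stated assumptions: parseTopic, estimateTokens, and the 100-character limit are hypothetical, and the conversion uses the common rule of thumb that one token is about three quarters of an English word.

```typescript
// Hypothetical upper bound on topic length, chosen only for illustration.
const MAX_TOPIC_LENGTH = 100;

// Return a trimmed topic string, or null when the body is not usable.
function parseTopic(body: unknown): string | null {
  if (typeof body !== "object" || body === null) return null;
  const topic = (body as Record<string, unknown>).topic;
  if (typeof topic !== "string") return null;
  const trimmed = topic.trim();
  if (trimmed.length === 0 || trimmed.length > MAX_TOPIC_LENGTH) return null;
  return trimmed;
}

// Approximate token count for a number of English words,
// assuming roughly 4 tokens for every 3 words.
function estimateTokens(words: number): number {
  return Math.ceil((words * 4) / 3);
}

// Example: a token budget for a note of at most 200 words.
console.log(estimateTokens(200));
```

A handler could return a 400 response when parseTopic yields null, and use the estimate as a lower bound for max_tokens so the note is not cut off mid-sentence.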

Creating a custom hook for handling the streaming response on frontend

Now, let’s get back to the frontend interface, call the API endpoint there, and handle the AI-generated streaming response.

Handling the streaming response is a bit tricky, but here’s how you can do it.

useFetchAiResponse Hook

//src/hooks/use-fetch-ai-response/index.tsx:

import { useState, Dispatch, SetStateAction } from 'react';

import { toast } from 'sonner';

import styles from './style.module.scss';

const useFetchAiResponse = (setAiResponse: Dispatch<SetStateAction<string>>) => {

const [loading, setLoading] = useState(false);

const showToastError = (message: string) => {

toast(

<div className={styles.toast__wrapper}>

<div className={styles.toast__title}>{message}</div>

</div>,

{

duration: 5000,

}

);

};

const fetchAiResponse = async (data: { topic: string }) => {

setLoading(true);

setAiResponse("");

try {

const response = await fetch('/api/message', {

method: 'POST',

body: JSON.stringify(data),

headers: { 'Content-type': 'application/json' },

});

if (!response.ok || !response.body) {

showToastError('Error Occurred');

setLoading(false);

return;

}

//logic for handling the streaming response starts here

const reader = response.body.getReader();

const decoder = new TextDecoder();

let done = false;

// run a loop while the reader has not stopped reading the streaming response.

while (!done) {

const { value, done: readerDone } = await reader.read();

done = readerDone;

if (value) {

const text = decoder.decode(value, { stream: true });

const cleanText = text.replace(/0:"/g, '').replace(/",/g, '').replace(/"/g, '');

setAiResponse((prev) => prev + cleanText);

}

}

} catch (error) {

showToastError('Error Occurred');

} finally {

setLoading(false);

}

};

return { fetchAiResponse, loading };

};

export default useFetchAiResponse;

For separation of concerns, we have placed the logic for handling the AI streaming response inside a custom hook in the src/hooks/use-fetch-ai-response/index.tsx file.
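The regular expressions in the hook strip the 0:" prefix and the quotes from each chunk, which also removes any quotation marks that belong to the note itself and leaves escape sequences such as \n in the text. As a hedged alternative, the sketch below assumes each line of the stream has the form 0:"some text", that is, a 0: prefix followed by a JSON-encoded string and a newline, as the cleanup regex implies. The class name StreamLineParser is hypothetical.

```typescript
// Assumption: the stream is made of lines like `0:"some text"` ending in "\n".
class StreamLineParser {
  private readonly decoder = new TextDecoder();
  private buffer = "";

  // Feed one network chunk; returns the text found in the complete lines so far.
  push(chunk: Uint8Array): string {
    // stream: true keeps incomplete multi-byte characters for the next call.
    this.buffer += this.decoder.decode(chunk, { stream: true });
    const lines = this.buffer.split("\n");
    // The last element is an unfinished line (or ""), so keep it buffered.
    this.buffer = lines.pop() ?? "";

    let text = "";
    for (const line of lines) {
      if (!line.startsWith("0:")) continue; // ignore any other line types
      try {
        // JSON.parse restores quotes, newlines, and other escaped characters.
        const parsed: unknown = JSON.parse(line.slice(2));
        if (typeof parsed === "string") text += parsed;
      } catch {
        // Skip a malformed line instead of aborting the whole stream.
      }
    }
    return text;
  }
}

// Example: a payload that arrives split in the middle of a line.
const parser = new StreamLineParser();
const payload = new TextEncoder().encode('0:"Hello, \\"AI\\""\n0:"\\nline two"\n');
const firstPart = parser.push(payload.slice(0, 10));
const secondPart = parser.push(payload.slice(10));
console.log(JSON.stringify(firstPart + secondPart));
```

Inside the read loop, the hook could call parser.push(value) and append the result with setAiResponse, which keeps Markdown that contains quotes or line breaks intact.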

Finally, complete the ‘onSubmit’ function in the ‘SelectForm’ component

We can now complete the onSubmit function, which we previously left empty. Here’s the updated code for it:

// src/components/select-form/index.tsx

import useFetchAiResponse from '@/hooks/use-fetch-ai-response';

const SelectForm: FC<Props> = ({ setAiResponse }) => {

const form = useForm<z.infer<typeof FormSchema>>({

resolver: zodResolver(FormSchema),

});

const { fetchAiResponse } = useFetchAiResponse(setAiResponse);

const onSubmit = async (data: z.infer<typeof FormSchema>) => {

await fetchAiResponse(data);

form.reset();

form.resetField("topic");

};

return (

<Form {...form}>

<form onSubmit={form.handleSubmit(onSubmit)} className={styles.form}>

{/* rest of the form content */}

</form>

</Form>

);

};

export default SelectForm;

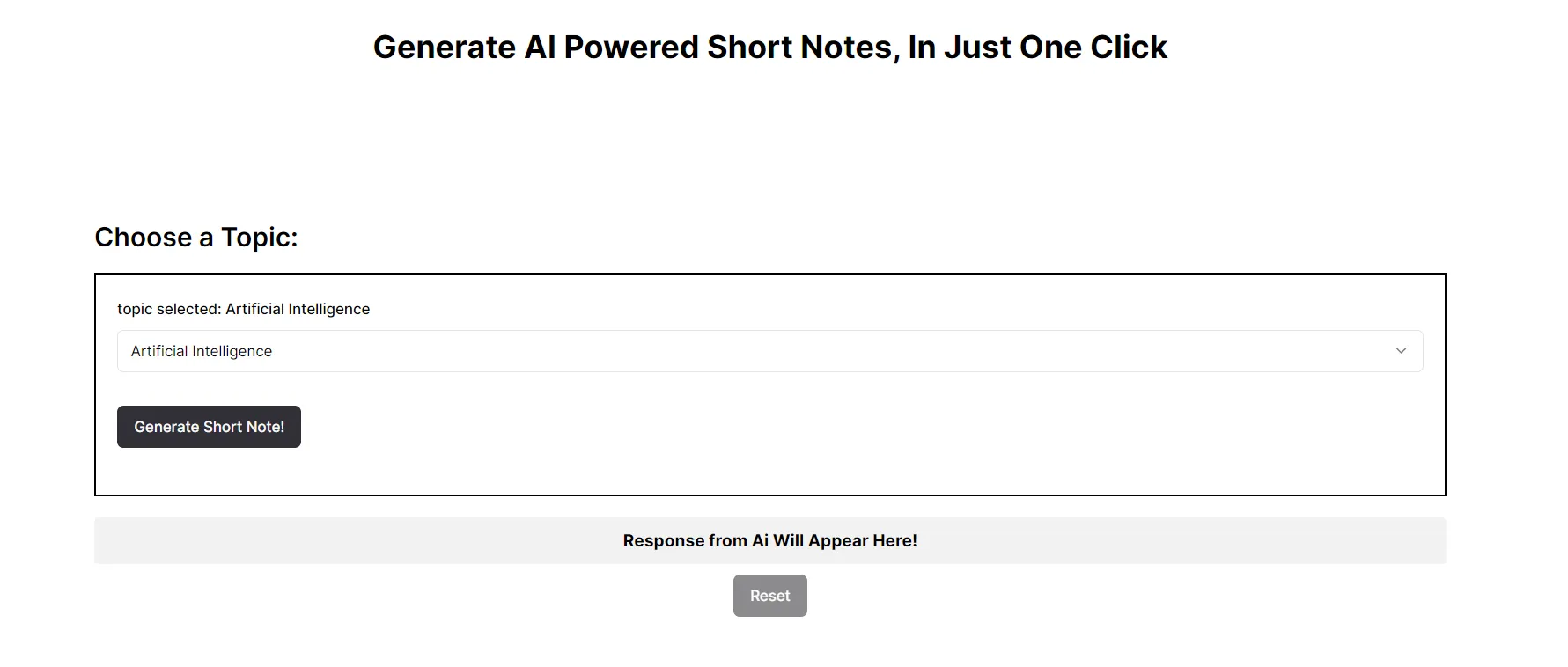

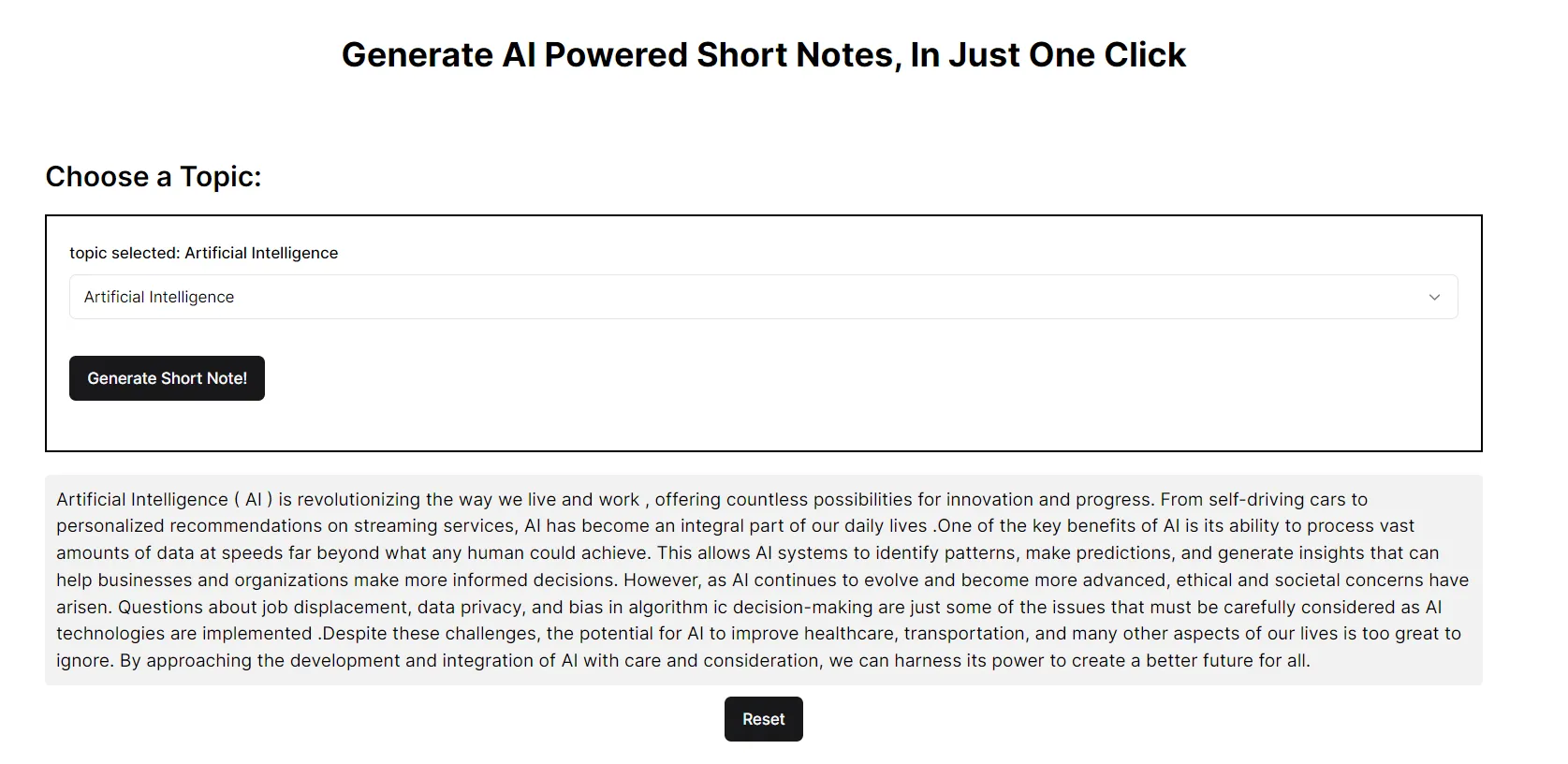

Testing the Project

Now, we have set up everything for the project, and it’s now working as expected. You just need to enter a topic on which you want to generate the short note and AI will send you the response.

Here’s a screenshot of the application where the AI has generated the response based on the user prompt :

Select the topic and click Generate Short Note!

Selecting the topic

Here’s the generated content displayed below the form :

Generated Content with AI

Conclusion:

Integrating AI into your web apps can significantly enhance their functionality and user experience. By leveraging APIs like those offered by OpenAI, you can achieve remarkable use cases and elevate your web applications to new heights.

I hope this article was useful in demonstrating how you can integrate OpenAI into your Next.js app and build amazing AI-powered web applications.